Multi-GPU and distributed training using Horovod in Amazon SageMaker Pipe mode | AWS Machine Learning Blog

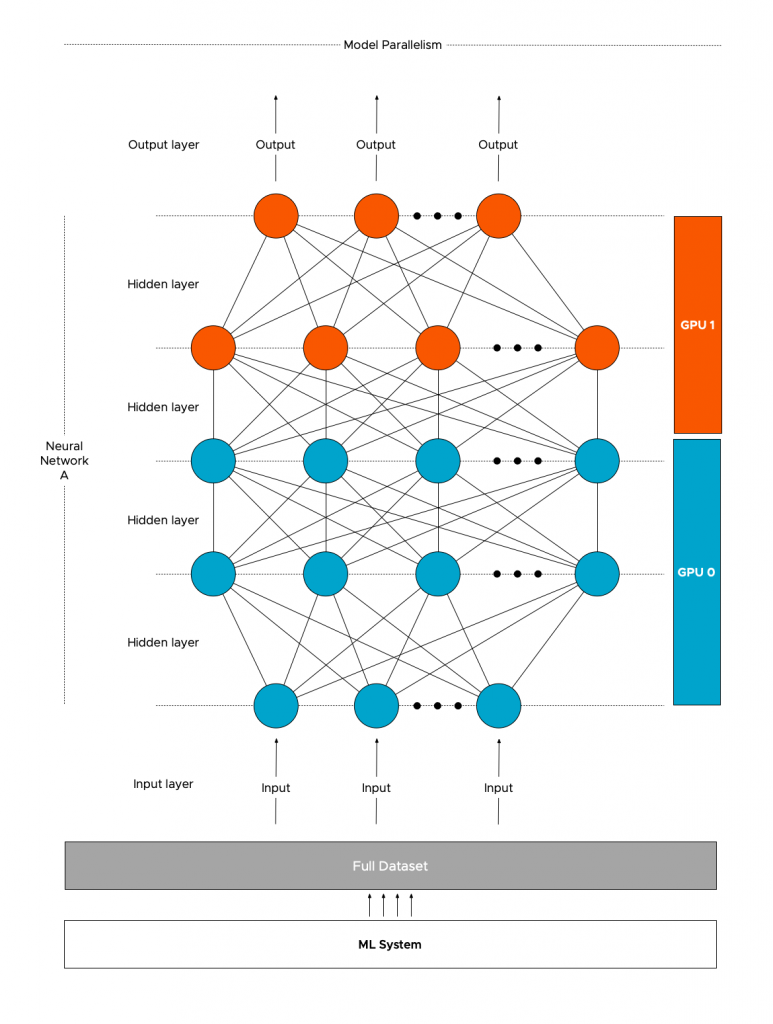

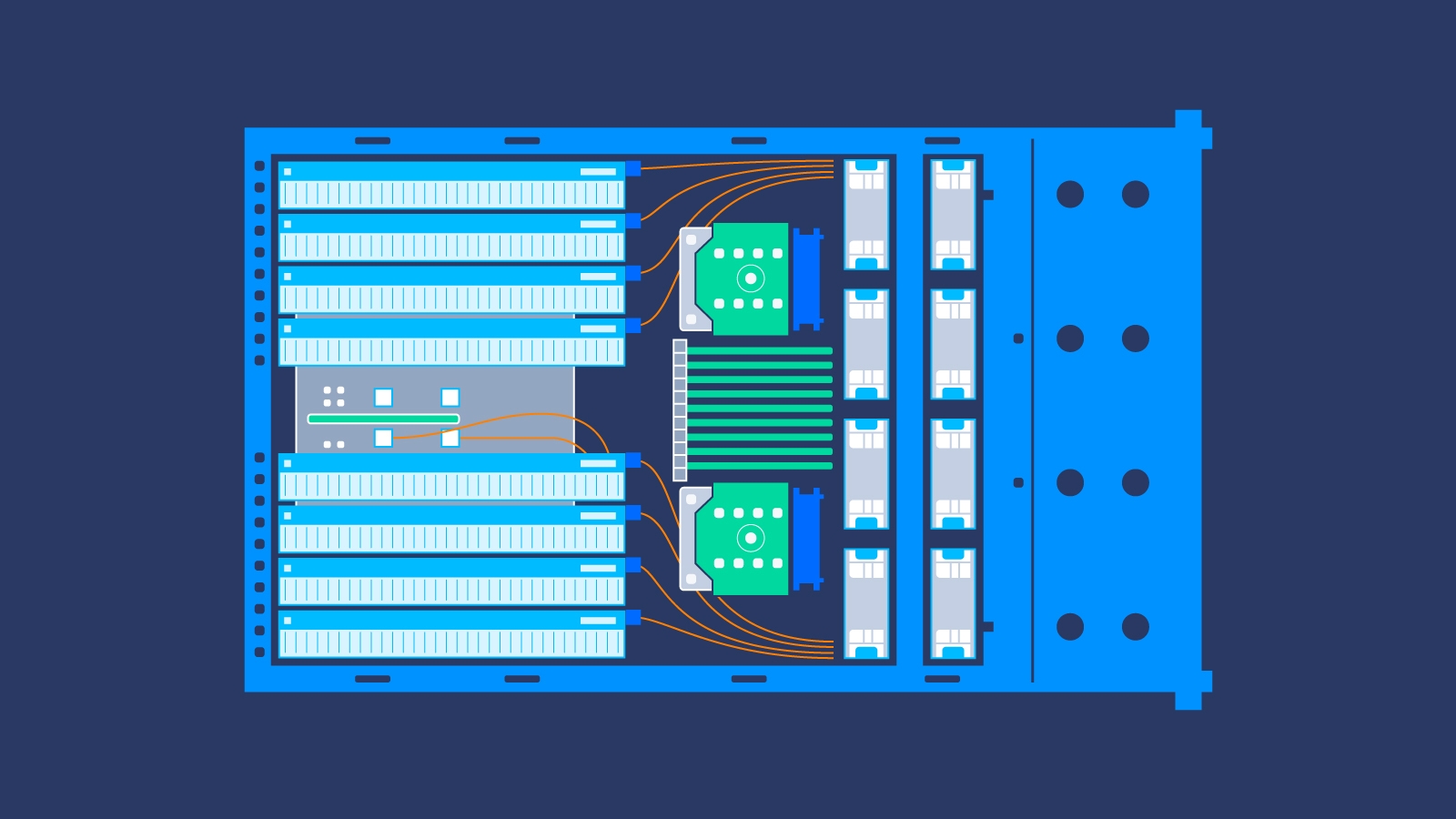

2. Distributed System Multiple GPUs where each CPU node contains one... | Download Scientific Diagram

Distributed Deep Learning Training with Horovod on Kubernetes | by Yifeng Jiang | Towards Data Science

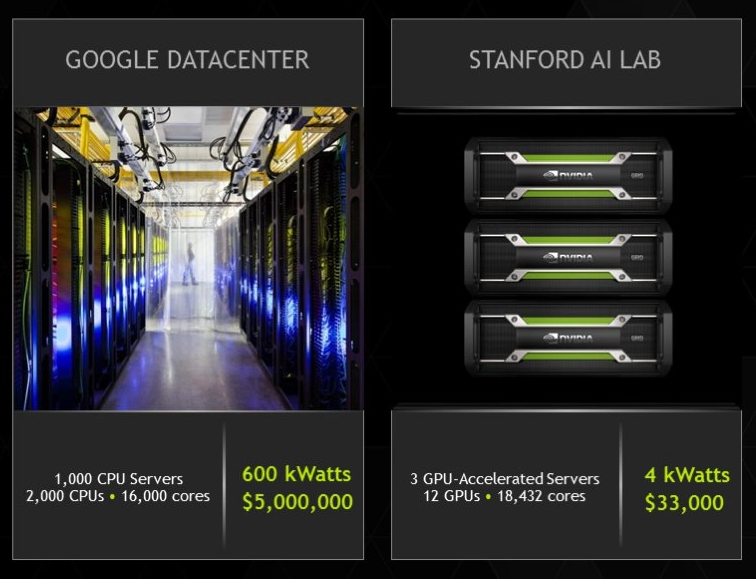

GTC Silicon Valley-2019: Accelerating Distributed Deep Learning Inference on multi-GPU with Hadoop-Spark | NVIDIA Developer

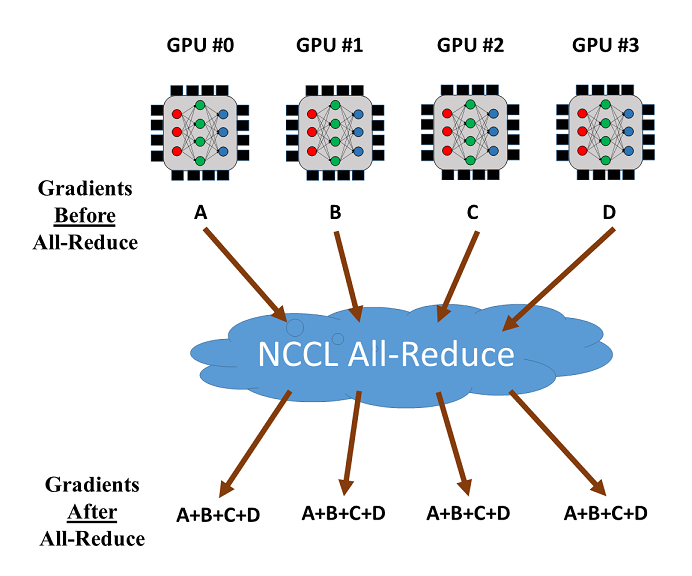

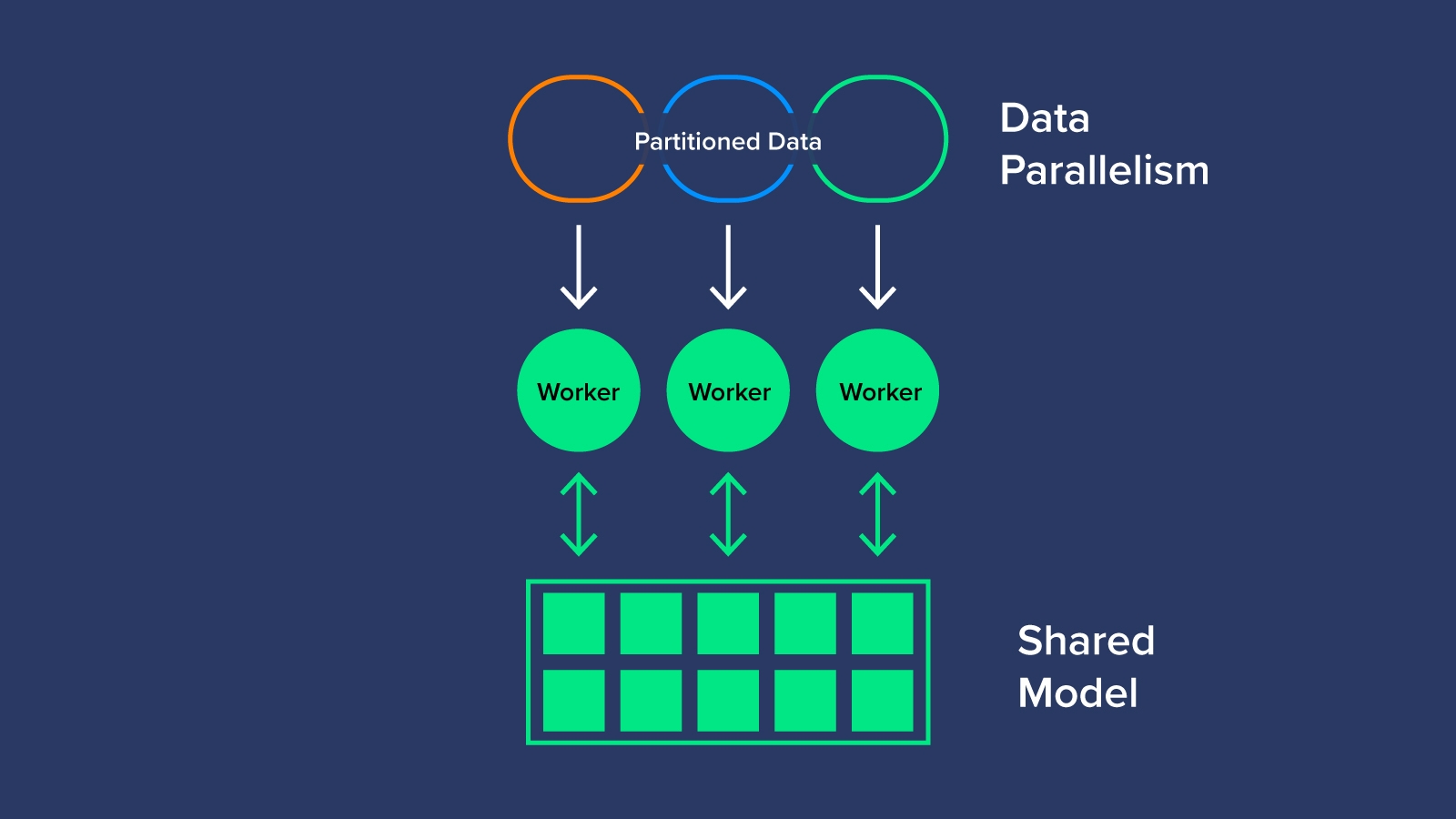

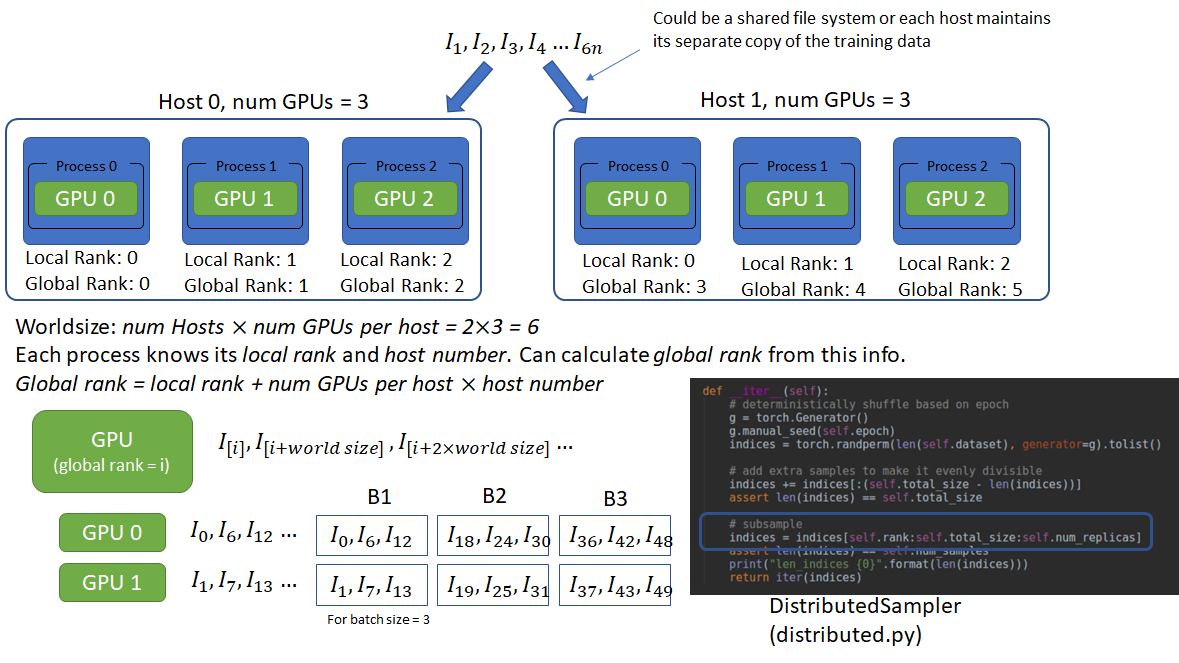

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer
